Perplexity Model Explained: Key Uses & Benefits in 2025

Welcome to the world of Natural Language Processing (NLP) and perplexity. If you’re interested in how language models are evaluated in machine learning and artificial intelligence (AI), then this guide is for you. In this article, we will dive deep into the concept of perplexity and explore its significance in NLP.

Key Takeaways:

- Perplexity is a metric used to evaluate models in Natural Language Processing (NLP).

- Language models assign probabilities to words and sentences, allowing them to predict the next word based on previous words.

- Perplexity can be defined as the normalised inverse probability of the test set or as the exponential of the cross-entropy.

- Perplexity and burstiness can be used to detect AI-generated text by analyzing how predictable the text is to a language model and how much its sentences vary.

- Understanding perplexity and burstiness helps to better comprehend AI-generated text and detect its presence.

A Quick Recap of Language Models

In the field of Natural Language Processing (NLP), language models play a crucial role in understanding and generating text. A language model is a statistical model that assigns probabilities to words and sentences, enabling it to predict the next word in a sentence based on the context of the previous words.

There are different types of language models, with the most basic being the unigram model. Unigram models consider individual words independently and assign probabilities to each word based on its frequency in the training data.

More generally, n-gram models take into account the context of the previous (n-1) words to predict the next word. The unigram model is the special case n = 1, while a bigram model (n = 2) looks at one previous word and a trigram model (n = 3) at two. By considering the preceding words, n-gram models with n > 1 capture local dependencies that a unigram model misses and so represent the language more accurately.

A table can be used to highlight the differences between unigram and n-gram models:

| Model | Key Features |
|---|---|
| Unigram Model | Considers individual words independently |
| n-gram Model | Takes into account the context of the previous (n-1) words |

Understanding the fundamentals of language models, such as the distinction between unigram and n-gram models, is crucial for comprehending the evaluation and application of these models in various NLP tasks.
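To make the distinction concrete, here is a minimal sketch of both models estimated from raw counts. The toy corpus and the function names `unigram_prob` and `bigram_prob` are made up for illustration; a practical model would also need smoothing for unseen words.

```python
from collections import Counter, defaultdict

# Toy training corpus (made up for illustration).
corpus = "the cat sat on the mat . the dog sat on the rug .".split()

# Unigram model: P(w) = count(w) / total words, ignoring context.
unigram_counts = Counter(corpus)
total = len(corpus)

def unigram_prob(word):
    return unigram_counts[word] / total

# Bigram model (n = 2): P(w | prev) = count(prev, w) / count(prev as context).
bigram_counts = defaultdict(Counter)
for prev, word in zip(corpus, corpus[1:]):
    bigram_counts[prev][word] += 1

def bigram_prob(prev, word):
    context_total = sum(bigram_counts[prev].values())
    # No smoothing: an unseen context simply gets probability 0.
    return bigram_counts[prev][word] / context_total if context_total else 0.0

print(unigram_prob("sat"))        # frequency of "sat" in the whole corpus
print(bigram_prob("cat", "sat"))  # probability of "sat" right after "cat"
```

In this corpus "sat" is a fairly rare word overall, but it always follows "cat", so the bigram model is far more confident about it in that context than the unigram model is.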

Evaluating Language Models

When it comes to evaluating language models, there are two main approaches: intrinsic evaluation and extrinsic evaluation.

Intrinsic evaluation focuses on measuring the properties of the language model itself. This includes metrics such as perplexity, which is a widely used evaluation metric in NLP. Perplexity measures how well the model predicts the test set, with lower perplexity indicating a better model. It takes into account the probabilities assigned to the test set by the language model.

Extrinsic evaluation, on the other hand, measures the performance of the language model on specific tasks. This involves using the model to complete language tasks, such as text classification or machine translation, and evaluating its performance based on predefined metrics. Extrinsic evaluation provides a more practical assessment of how well the language model performs in real-world applications.

When evaluating language models, it is important to consider both intrinsic and extrinsic evaluation. Intrinsic evaluation provides insights into the model’s internal properties, such as its ability to assign probabilities to words and sentences. Extrinsic evaluation, on the other hand, focuses on the model’s performance on specific tasks, which is crucial for assessing its practical usefulness.

By utilizing metrics such as perplexity and conducting both intrinsic and extrinsic evaluations, researchers and practitioners can gain a comprehensive understanding of a language model’s performance and capabilities. This information can then be used to make informed decisions about the model’s suitability for different language tasks.

| Evaluation Approach | Focus | Metrics |
|---|---|---|
| Intrinsic Evaluation | Internal properties of the model | Perplexity |
| Extrinsic Evaluation | Performance on specific tasks | Task-specific metrics |

In conclusion, evaluating language models calls for both approaches: intrinsic metrics such as perplexity reveal the model’s internal properties, while extrinsic evaluation shows how it performs on specific tasks. Together they support informed decisions about a model’s applicability to different language tasks.

Perplexity as the Normalised Inverse Probability of the Test Set

In the context of evaluating language models, perplexity can be defined as the normalised inverse probability of the test set. This mathematical metric provides valuable insights into how well a model predicts the test set and can be a useful tool in assessing model performance. A lower perplexity score indicates a better-performing model.

To calculate perplexity, we take the inverse of the probability the model assigns to the test set and normalise it by taking the N-th root, where N is the number of words in the test set. Normalising this way gives a per-word figure, so test sets of different lengths can be compared. The resulting perplexity score provides a measure of how surprised the model is by the test set. A lower perplexity score means that the model was better able to predict the test set.

Perplexity Equation

The perplexity equation can be represented as follows:

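Perplexity = 2^(-(1/N) * log2(P(w1, w2, …, wN)))

Where N is the number of words in the test set and P(w1, w2, …, wN) is the probability of the test set according to the model. The logarithm is taken in base 2 to match the base of the exponent, so the expression is equivalent to P(w1, w2, …, wN)^(-1/N), the N-th root of the inverse probability. Because the probability lies between 0 and 1, its logarithm is negative, and the minus sign makes the exponent positive, so perplexity is always at least 1.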
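Written out in standard notation, the equation and the “normalised inverse probability” description are the same quantity. The sketch below assumes base-2 logarithms and uses the chain rule to expand the joint probability into per-word conditional probabilities; the symbol PP(W) for the perplexity of a test set W is notation introduced here for convenience.

```latex
\begin{align*}
PP(W) &= P(w_1, w_2, \ldots, w_N)^{-\frac{1}{N}}
       = \sqrt[N]{\frac{1}{P(w_1, w_2, \ldots, w_N)}} \\
      &= 2^{-\frac{1}{N} \log_2 P(w_1, w_2, \ldots, w_N)} \\
      &= 2^{-\frac{1}{N} \sum_{i=1}^{N} \log_2 P(w_i \mid w_1, \ldots, w_{i-1})}
\end{align*}
```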

| Test Set | Model Probability |
|---|---|
| This is a test sentence. | 0.0032 |
| Another example sentence. | 0.0018 |

In the example above, we have a test set consisting of two sentences. The model assigns a probability of 0.0032 to the first sentence and a probability of 0.0018 to the second sentence. Using the perplexity equation, we can calculate the perplexity score for this test set, providing a measure of how well the model predicts this particular data.
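The calculation for this two-sentence test set can be sketched in a few lines of Python. Two assumptions are made here that the table does not state: N counts words only (4 + 3 = 7, ignoring punctuation and any end-of-sentence tokens), and the sentences are treated as independent, so the probability of the test set is the product of the sentence probabilities.

```python
import math

# Sentence probabilities from the table above.
sentence_probs = [0.0032, 0.0018]

# Assumption: N counts words only (4 + 3 = 7), ignoring punctuation and
# any end-of-sentence tokens a real model might add.
num_words = 4 + 3

# Assumption: sentences are independent, so log P(test set) is the sum of
# the sentence log-probabilities (i.e. the probabilities multiply).
log2_prob = sum(math.log2(p) for p in sentence_probs)

# Perplexity = 2^(-(1/N) * log2 P(test set))
perplexity = 2 ** (-log2_prob / num_words)

print(round(perplexity, 2))
```

Under these assumptions the score comes out at roughly 5.6, meaning the model is about as uncertain as if it were choosing uniformly among five or six words at each step. Working with log-probabilities rather than multiplying raw probabilities also avoids numerical underflow on realistic test sets.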

Perplexity as the Exponential of the Cross-Entropy

Perplexity, as we mentioned earlier, can be defined in two ways when it comes to evaluating language models. We’ve already covered one definition, which is perplexity as the normalised inverse probability of the test set. Now, let’s explore the second definition: perplexity as the exponential of the cross-entropy. This alternative view provides another perspective on how perplexity can be interpreted and calculated.

The cross-entropy measures the average number of bits needed to encode each word of the data when using the model’s predicted distribution in place of the true one. In the context of language models, it represents how well the model predicts the actual distribution of words in a given dataset. When we raise 2 to the power of the cross-entropy (measured in bits), we obtain the perplexity score. This score can be seen as a weighted branching factor: the effective number of equally likely words the model is choosing between at each step.

The relationship between perplexity and cross-entropy rests on the Shannon-McMillan-Breiman theorem, which states that for a stationary, ergodic source the per-word negative log-probability of a sufficiently long sequence converges to the entropy rate of the source. This is what allows the cross-entropy of a language model to be estimated from a single large test set, as -(1/N) * log2(P(w1, w2, …, wN)). Raising 2 to that estimate gives the perplexity, so the two definitions describe the same number. Therefore, by examining the cross-entropy and its exponential counterpart, we gain insights into the complexity and predictability of a language model.

To summarize, perplexity as the exponential of the cross-entropy offers a different perspective on evaluating language models. It expresses the model’s average uncertainty as an effective number of equally likely word choices, shedding light on the model’s predictability and branching factor. By understanding both definitions of perplexity, we can gain a comprehensive understanding of how language models perform.
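The branching-factor reading is easiest to see with numbers. In the sketch below, the helper names `cross_entropy_bits` and `perplexity` and the probability lists are illustrative assumptions; each list holds the probability a hypothetical model assigned to the word that actually occurred at each step.

```python
import math

def cross_entropy_bits(token_probs):
    """Average negative log2 probability given to each observed token."""
    return -sum(math.log2(p) for p in token_probs) / len(token_probs)

def perplexity(token_probs):
    """Perplexity as 2 raised to the cross-entropy (in bits)."""
    return 2 ** cross_entropy_bits(token_probs)

# A model that spreads probability uniformly over 8 words at every step.
uniform = [1 / 8] * 5
# A model that is usually confident about the word that actually occurs.
confident = [0.9, 0.8, 0.5, 0.9, 0.7]

print(cross_entropy_bits(uniform), perplexity(uniform))
print(cross_entropy_bits(confident), perplexity(confident))
```

The uniform model needs 3 bits per word and has a perplexity of 8, exactly the number of words it is choosing between. The confident model has a much lower perplexity, reflecting a smaller effective branching factor.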

Artificial Intelligence, Copywriting, and Creativity

Artificial Intelligence (AI) has revolutionized many industries, including the field of copywriting. Language models, powered by AI, have the ability to generate content that mimics human writing styles and is often indistinguishable from text written by a human. This has opened up new possibilities for businesses and marketers looking to create engaging and persuasive content.

Copywriting is an art that requires creativity and an understanding of the target audience. AI-powered language models can analyze vast amounts of data and learn patterns to generate text that is tailored to specific audiences. These models can generate headlines, blog posts, social media captions, and even product descriptions. By using AI in copywriting, businesses can save time and resources while still producing high-quality content.

Creativity is an essential aspect of copywriting, and many may wonder if AI can truly replicate this human trait. While AI can generate content that is grammatically correct and coherent, it may lack the intuition and deeper understanding that humans possess. However, AI can be a valuable tool for copywriters, helping them generate ideas, refine their writing, and experiment with different styles and tones.

The Role of Language Models in Copywriting

Language models are at the heart of AI-powered copywriting. These models are trained on massive amounts of text data, enabling them to understand language patterns and generate text that sounds natural and human-like. By leveraging these language models, copywriters can automate the content creation process and generate high volumes of tailored content.

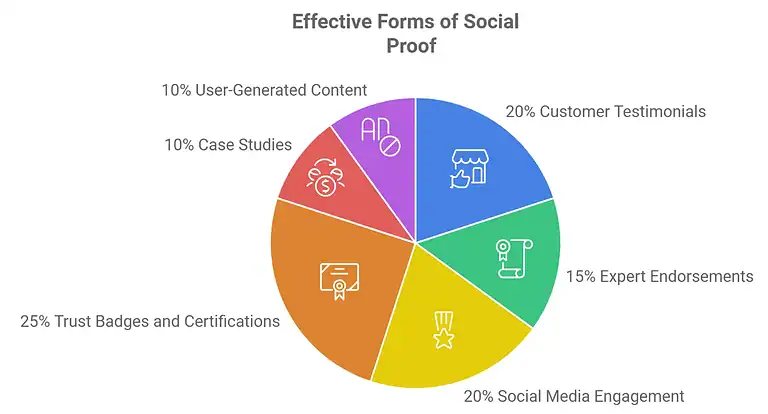

However, it’s important to note that AI-generated content should still be evaluated using metrics such as perplexity and burstiness. Perplexity measures how well the language model predicts the next word, while burstiness evaluates the variation and spontaneity of the generated content. These metrics help ensure that the AI-generated text aligns with the brand’s voice and resonates with the target audience.

| Perplexity | Burstiness |
|---|---|
| Perplexity is a metric used to measure how well a language model predicts the next word in a sequence of words. A lower perplexity score indicates that the model is better at predicting the next word. | Burstiness measures the predictability of a piece of content. It evaluates the homogeneity of sentence lengths, structures, and tempos. Higher burstiness indicates greater variability and spontaneity in the content. |

In conclusion, AI has transformed the world of copywriting by enabling businesses to generate high-quality content at scale. Language models, powered by AI, can mimic human writing styles and create tailored content for specific audiences. While AI-generated content can save time and resources, it should still be evaluated using metrics like perplexity and burstiness to ensure its quality and effectiveness.

Burstiness and Its Role in AI-Generated Content

In the world of artificial intelligence and copywriting, burstiness plays a crucial role in understanding the quality and authenticity of AI-generated content. Burstiness measures the predictability of a piece of content based on various factors such as sentence lengths, structure, and tempos. It helps us determine the level of content variability and spontaneity, which are important indicators of human-like text.

When analyzing burstiness in AI-generated content, one noticeable trend is its lower level compared to human-generated content. AI tends to produce text with more uniformity in sentence lengths, structure, and tempos. This lack of burstiness can make the generated content appear less varied and spontaneous, which can be a telltale sign of machine-generated text.

For instance, let’s consider the sentence lengths in a paragraph of AI-generated text. If all the sentences have similar lengths, without any significant variation, it suggests a lack of natural flow and human-like expression. Similarly, if the structure of the text remains consistently rigid, with little variation in paragraph composition or sentence order, it can further indicate that the content is AI-generated.

Table: Burstiness Analysis Comparison

| Feature | Human-Generated Content | AI-Generated Content |
|---|---|---|
| Sentence Lengths | Varying lengths, reflecting human expression | Similar lengths, lacking natural flow |
| Structure | Varied paragraph composition and sentence order | Consistently rigid structure |
| Tempos | Dynamic pace, reflecting human thought process | Unchanging tempo, lacking spontaneity |

By considering burstiness, we can gain valuable insights into the authenticity and human-like nature of AI-generated content. Evaluating the predictability and content variability allows us to distinguish between machine-generated and human-generated text, leading to improved detection methods and enhanced understanding of AI’s impact on content creation.
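There is no single agreed formula for burstiness. As one simple proxy, the sketch below uses the coefficient of variation of sentence length (standard deviation divided by mean). The function names, the naive sentence splitter, and the sample texts are all assumptions made for illustration.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length (std / mean).

    This is one simple proxy, not a standard definition: 0 means every
    sentence has the same length; larger values mean more variation.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform_text = "The cat sat down. The dog ran off. The bird flew away."
varied_text = (
    "Stop. The cat, startled by the noise, bolted across the yard "
    "and over the fence. Silence."
)

print(burstiness(uniform_text))
print(burstiness(varied_text))
```

The first text, with three sentences of identical length, scores 0, while the second, which mixes one-word sentences with a long one, scores well above it. Sentence length is only one of the features discussed above; structure and tempo would need their own measures.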

Detecting AI-Generated Text with Perplexity and Burstiness

If you ever come across a piece of text that seems too good to be true, it might be worth investigating whether it was generated by artificial intelligence (AI). With advancements in natural language processing (NLP) and machine learning, AI-generated text has become more sophisticated. However, there are ways to detect such content using metrics like perplexity score and burstiness measures.

Perplexity Score

Perplexity is a metric commonly used in NLP to evaluate the performance of language models. It measures how well a language model predicts a given text, with lower scores meaning the text was more predictable to the model. Text produced by a language model tends to follow the high-probability word choices such models favour, so it typically scores low, whereas human writing contains more unexpected word choices and tends to score higher. When it comes to detecting AI-generated text, an unusually low perplexity score is therefore a signal, though not proof, that the text was generated by AI rather than written by a human.

Burstiness Measures

Burstiness refers to the degree of variability in the structure, sentence lengths, and tempos of a piece of text. Human-generated content tends to exhibit more burstiness, as it is characterized by variations and spontaneity. On the other hand, AI-generated text often lacks this burstiness and appears more uniform. By measuring the burstiness of a text, we can gain insights into its origin. Lower burstiness measures may suggest that the text was generated by AI rather than a human.

| Perplexity Score | Burstiness Measures |
|---|---|
| Lower score suggests AI-generated text | Lower measures suggest AI-generated text |

To detect AI-generated text, natural language processing tools or AI-based content analyzers can be employed. These tools utilize algorithms and machine learning techniques to calculate perplexity scores and burstiness measures, providing valuable insights into the nature of the text. By leveraging these metrics, researchers and content creators can identify AI-generated text and distinguish it from human-generated content.
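Real detectors score text with large neural language models. To show only the mechanics of scoring an arbitrary text, the sketch below substitutes a tiny add-one (Laplace) smoothed unigram model built from a made-up reference corpus; `train_unigram`, `text_perplexity`, and the sample strings are hypothetical names and data. A detector would then compare the score against a threshold it has calibrated, which is not shown here.

```python
import math
from collections import Counter

def train_unigram(reference_text):
    """Count words in a reference corpus (a stand-in for a trained model)."""
    return Counter(reference_text.lower().split())

def text_perplexity(text, counts):
    """Perplexity of `text` under an add-one smoothed unigram model."""
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # +1 reserves probability for unseen words
    log2_sum = 0.0
    for word in words:
        prob = (counts[word] + 1) / (total + vocab_size)
        log2_sum += math.log2(prob)
    return 2 ** (-log2_sum / len(words))

reference = "the cat sat on the mat the dog sat on the rug"
counts = train_unigram(reference)

familiar = "the cat sat on the rug"
surprising = "quantum ferrets juggle the violet equations"

print(text_perplexity(familiar, counts))
print(text_perplexity(surprising, counts))
```

The text built from words the model has seen often receives a much lower perplexity than the text full of unseen words, which is the kind of difference a detector looks at alongside burstiness.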

Conclusion

Perplexity is a valuable metric in the field of Natural Language Processing (NLP) and the evaluation of language models. It provides insights into the performance and accuracy of these models, which play a significant role in the development of Artificial Intelligence (AI) and Machine Learning (ML) systems.

By understanding perplexity, we can better assess how well a language model predicts the next word or sentence based on the previous words. Lower perplexity scores indicate a better model, as it demonstrates a higher level of accuracy in predicting the test set. Evaluating language models through intrinsic measures like perplexity allows us to gauge their effectiveness without relying on specific tasks or objectives.

In addition to perplexity, burstiness is another factor to consider when analyzing AI-generated text. Burstiness measures the predictability and variability of content, including factors such as sentence lengths, structures, and tempos. AI-generated text tends to have lower burstiness compared to human-generated text, which exhibits more diversity and spontaneity.

By utilizing NLP tools or AI-based content analyzers, we can detect AI-generated text by assessing the perplexity scores and burstiness measures. Lower perplexity scores and lower burstiness indicate a higher likelihood of machine-generated text, because such text is both more predictable to a language model and more uniform in style. Understanding these metrics helps us navigate the complex landscape of AI-generated content and assess its presence more reliably.

FAQ

What is perplexity and why is it important in Natural Language Processing (NLP)?

Perplexity is a metric used to evaluate language models in NLP. It measures how well the model predicts a given test set, with lower perplexity indicating better performance. Understanding perplexity is crucial in assessing the accuracy and effectiveness of language models in NLP tasks.

How do language models work and what are the different types?

Language models are statistical models that assign probabilities to words and sentences. They can predict the next word in a sentence based on previous words. This article covers n-gram models, which condition on the previous (n-1) words; the simplest case is the unigram model (n = 1), which considers each word independently.

What is the difference between intrinsic and extrinsic evaluation of language models?

Intrinsic evaluation measures properties of the language model itself, while extrinsic evaluation measures the model’s performance on a specific task. Perplexity is an intrinsic evaluation metric commonly used in NLP to assess the quality of language models.

How is perplexity defined as the normalised inverse probability of the test set?

Perplexity can be defined as the normalised inverse probability of the test set. It reflects how well the language model predicts the test set, and lower perplexity scores indicate better model performance. The perplexity is calculated by taking the inverse of the probability the model assigns to the test set and normalising it with the N-th root, where N is the number of words in the test set.

Can you explain perplexity as the exponential of cross-entropy?

Perplexity can also be defined as the exponential of the cross-entropy. Cross-entropy measures the average number of bits needed to encode each word of the data using the model’s predicted probabilities. Perplexity, in this context, represents the weighted branching factor: the effective number of equally likely words the model is choosing between at each step.

How do language models play a role in artificial intelligence and copywriting?

Language models are used in artificial intelligence to generate content. They can create text based on statistical patterns and predictions. Evaluating the quality and human-like nature of this generated content can be done using metrics like perplexity and burstiness.

What is burstiness and how does it relate to AI-generated content?

Burstiness measures the predictability of content based on the homogeneity of sentence lengths, structures, and tempos. AI-generated content tends to have lower burstiness compared to human-generated content, which is more varied and spontaneous.

How can perplexity and burstiness be used to detect AI-generated text?

Perplexity and burstiness can be used as measures to identify AI-generated text. Lower perplexity scores and lower burstiness suggest that the text is more likely machine-generated, since it is both highly predictable to a language model and uniform in style. NLP tools and AI-based content analyzers can calculate these measures and assist in identifying AI-generated content.

What is the significance of perplexity in evaluating language models?

Perplexity is a valuable metric for evaluating language models in NLP. It provides insights into the model’s performance, accuracy, and ability to predict text. Understanding perplexity helps researchers and practitioners assess the effectiveness of language models in various NLP tasks.

Source Links

- https://surge-ai.medium.com/evaluating-language-models-an-introduction-to-perplexity-in-nlp-f6019f7fb914

- https://medium.com/the-generator/the-dummy-guide-to-perplexity-and-burstiness-in-ai-generated-content-1b4cb31e5a81

- https://towardsdatascience.com/perplexity-in-language-models-87a196019a94

I’m Alexios Papaioannou, an experienced affiliate marketer and content creator. With a decade of expertise, I excel in crafting engaging blog posts to boost your brand. My love for running fuels my creativity. Let’s create exceptional content together!